Setup

library(data.table)

library(here)## here() starts at /Users/davidlucey/Desktop/David/Projects/redwall-analyticslibrary(glue)## Warning: package 'glue' was built under R version 4.1.2library(tictoc)

setDTthreads(percent = 90)

path_to_data <- "~/Desktop/David/Projects/uscompanies/data"

path_to_original <- here::here(path_to_data, "uscompanieslist.csv")Introduction

On a recent side project, we encountered a large (7GB) csv of 30+ million US business names and addresses, which couldn’t be loaded into R, because of corrupted records. While not widely discussed, we have known for some time that it was possible to pipe command line instructions into {data.table}’s fread() by using its “cmd” parameter. However, there were only a few snippets available about how to do this, and most of these were constrained to limited strategies using awk. There were a few times in the past that we used awk, and we sometimes even got it to work, though we often didn’t understand why. awk seems like a great tool, but is like learning an entirely new language.

When we discovered Jeroen Janssens’ Data Science at the Command Line a few months ago, we realized there were a lot more possibilities for solving problems like this one. This book helped us to understand that the strategy of using fread()’s cmd capability might be expanded beyond awk. Unfortunately, the data set does not belong to us, so we cannot share it, but we will demonstrate the methods in case helpful for others.

Tools and Setup

The specific tools we been learning are {xsv}, {rg} (ripgrep), csvkit and scrubcsv. The first two were developed by BurntSushi using Rust, {csvkit} is a Python package, and {scrubcsv} is another Rust package inspired by the first two. We quickly learned that this tool set is a lot easier to install on Mac than Windows (using WSL), because most can be installed with Homebrew, the Mac package manager. We were not able to figure out how to install {xsv} and ripgrep on WSL, but “brew install xsv” and “brew install ripgrep” easily installed the libraries on our Mac.

Since we started our data journey about 5 years ago, managing Python installations has always been a challenge, and we will not discuss this here. Once Python is set up, the third is easy with “pip install csvkit”. Lastly, {scrubcsv} requires one step further, because there is no Homebrew formula, so first Rust and its package manager cargo had to be installed, which again can be accomplished with Homebrew following these instructions. Once installed, {scrubcsv} only requires “cargo install scrubcsv”.

Of the tools, {rg} is grep on steroids, while {xsv} and {csvkit} have many similar capabilities to slice and dice a csv. Though {xsv} is a significantly faster, {csvkit} has a built in cleancsv capability which can be used to solve our problem. {scrubcsv} does only one thing, it drops rows with the wrong number of columns, and it does this very fast. This seems like a more limited solution, but in our case it turns out to be just the ticket.

The Problem

As shown below, when we try to load the data set, we get “Error in fread(”/Users/davidlucey/Desktop/David/Projects/uscompanies/data/uscompanieslist.csv”, : R character strings are limited to 2^31-1 bytes”. We were not the only ones who have encountered this cryptic error, but it seemed the main way to solve it as outlined in this SO post https://stackoverflow.com/questions/68075990/loading-csv-with-fread-stops-because-of-to-large-string, is to ask the owner to reformat it, which wasn’t an option.

# Unsuccessful code

try(fread(path_to_original))## Error in fread(path_to_original) :

## R character strings are limited to 2^31-1 bytesAs the SO poster was asking, it would be nice to be able to instruct fread() to try each and skip the bad rows, but this is not possible (at least from what we have figured out so far). We didn’t know which or how many rows specifically were causing the problem. Since the data set was so large, finding the problem, rows felt like a needle in a haystack, and the usual solution of loading it all into memory and looking around wasn’t possible.

Using csvclean

Like many who were previously scared by the CLI, the first step was to get over the fear of the help manual, cleancsv shown below.

{csvclean} Manual

csvclean -h## usage: csvclean [-h] [-d DELIMITER] [-t] [-q QUOTECHAR] [-u {0,1,2,3}] [-b]

## [-p ESCAPECHAR] [-z FIELD_SIZE_LIMIT] [-e ENCODING] [-S] [-H]

## [-K SKIP_LINES] [-v] [-l] [--zero] [-V] [-n]

## [FILE]

##

## Fix common errors in a CSV file.

##

## positional arguments:

## FILE The CSV file to operate on. If omitted, will accept

## input as piped data via STDIN.

##

## optional arguments:

## -h, --help show this help message and exit

## -d DELIMITER, --delimiter DELIMITER

## Delimiting character of the input CSV file.

## -t, --tabs Specify that the input CSV file is delimited with

## tabs. Overrides "-d".

## -q QUOTECHAR, --quotechar QUOTECHAR

## Character used to quote strings in the input CSV file.

## -u {0,1,2,3}, --quoting {0,1,2,3}

## Quoting style used in the input CSV file. 0 = Quote

## Minimal, 1 = Quote All, 2 = Quote Non-numeric, 3 =

## Quote None.

## -b, --no-doublequote Whether or not double quotes are doubled in the input

## CSV file.

## -p ESCAPECHAR, --escapechar ESCAPECHAR

## Character used to escape the delimiter if --quoting 3

## ("Quote None") is specified and to escape the

## QUOTECHAR if --no-doublequote is specified.

## -z FIELD_SIZE_LIMIT, --maxfieldsize FIELD_SIZE_LIMIT

## Maximum length of a single field in the input CSV

## file.

## -e ENCODING, --encoding ENCODING

## Specify the encoding of the input CSV file.

## -S, --skipinitialspace

## Ignore whitespace immediately following the delimiter.

## -H, --no-header-row Specify that the input CSV file has no header row.

## Will create default headers (a,b,c,...).

## -K SKIP_LINES, --skip-lines SKIP_LINES

## Specify the number of initial lines to skip before the

## header row (e.g. comments, copyright notices, empty

## rows).

## -v, --verbose Print detailed tracebacks when errors occur.

## -l, --linenumbers Insert a column of line numbers at the front of the

## output. Useful when piping to grep or as a simple

## primary key.

## --zero When interpreting or displaying column numbers, use

## zero-based numbering instead of the default 1-based

## numbering.

## -V, --version Display version information and exit.

## -n, --dry-run Do not create output files. Information about what

## would have been done will be printed to STDERR.As we discovered is often the case with UNIX tools, there were not as many walk-through detailed examples of {csvkit} as with many R packages. We found this one particularly cryptic as it seemed unclear about its output, but in hindsight, the -n command mentions “output files” which are created. We were concerned that it might alter our data, so created a backup and ran against that.

# Run previously to verify working, output on disc

system(command = glue::glue("csvclean {path_to_original}"))After about an hour, the final output gives two new csv’s (“uscompanieslist_err.csv” and “uscompanieslist_out.csv”) by default, and leaves the original intact (uscompanieslist.csv). This is good, but means there is a need for a lot of disc space.

## [1] "uscompanieslist_err.csv" "uscompanieslist_out.csv"

## [3] "uscompanieslist.csv"Bad Rows

In “uscompanieslist_err.csv”, csvclean adds two columns, one of which specifies the actual number of rows versus the number expected in each row. It also contains the line number of the original file where the problem was happening, which would have been nice to have earlier while we were hunting for bad rows. The cadence of our bad rows, which is every few thousand, can be seen and why our efforts at trying to load in chunks was problematic (chunks of a few thousand rows in 30 million).

Load uscompanieslist_err.csv Metadata

data <-

fread(here::here(path_to_data, "uscompanieslist_err.csv"), select = 1:2, nrows = 10)

data## line_number msg

## 1: 5554 Expected 28 columns, found 22 columns

## 2: 5593 Expected 28 columns, found 22 columns

## 3: 5594 Expected 28 columns, found 22 columns

## 4: 8150 Expected 28 columns, found 22 columns

## 5: 8151 Expected 28 columns, found 22 columns

## 6: 8152 Expected 28 columns, found 22 columns

## 7: 8153 Expected 28 columns, found 22 columns

## 8: 8154 Expected 28 columns, found 22 columns

## 9: 8155 Expected 28 columns, found 22 columns

## 10: 8156 Expected 28 columns, found 22 columnsThis file still contains rows with a differing number of columns, so still cannot be read by fread(). Here we use {rg} to filter out the remaining bad rows and {xsv} to drop the csvclean’s metadata columns, piped into fread(). In our case, most of the intact rows have 22 columns, instead of the expected 28, so we are guessing this data was somehow tacked on from another source. Although we use {rg} again here, we could have used grep and it probably wouldn’t have been much difference for 1 million rows, but it could also be done with any of the other tools or even with a traditional grep, also in about 10 seconds.

tic()

# Load bad_csvkit_data

bad_csvkit_data <-

fread(cmd = glue::glue(

"rg '22 columns' { here::here(path_to_data, 'uscompanieslist_err.csv') } | xsv select 3-13,17,19,20-21,24"))

toc()## 48.651 sec elapsedAs shown above there are 1070764 in the data set, and column names are lost and have to be manually re-inserted. At first, we were worries that the columns would be badly formatted, mistakenly merging columns, but looking at random samples of rows, this was not the case. A faster alternative with {scrubcsv}. There are also several columns which are missing all data or almost all blank cells. We can also add NULL columns for the ones which are missing.

# Column names

data_names <- c(

"COMPANY_NAME",

"SIC_CODE",

"SIC_DESCRIPTION",

"ADDRESS",

"CITY",

"STATE",

"ZIP",

"COUNTY",

"PHONE",

"FAX_NUMBER",

"WEBSITE",

"EMPLOYEE_RANGE",

"SALES_VOLUME_RANGE",

"CONTACT_FIRSTNAME",

"CONTACT_LASTNAME",

"CONTACT_TITLE"

)

names(bad_csvkit_data) <- data_names

sample <- bad_csvkit_data[sample(5)]# Quick view of final data

sample## COMPANY_NAME SIC_CODE SIC_DESCRIPTION ADDRESS

## 1: Abdelbaki, zoheir a md 8011 PHYSICIANS & SURGEONS 770 w high st # 370

## 2: Ackman, carmela 8111 Attorneys 110 e 42nd st # 1401

## 3: Ackman, carmela 8111 Attorneys 110 e 42nd st # 1401

## 4: Abel-hatzel, wendy 6411 Insurance po box 1780

## 5: Abel-hatzel, wendy 6411 Insurance po box 1780

## CITY STATE ZIP COUNTY PHONE FAX_NUMBER WEBSITE

## 1: Lima OH 45801 Allen 4192264310

## 2: NEW YORK NY 10017 New York 2122531560 ackmanziff.com

## 3: NEW YORK NY 10017 New York 2122531560 ackmanziff.com

## 4: COOS BAY OR 97420 Coos 5412674124

## 5: COOS BAY OR 97420 Coos 5412674124

## EMPLOYEE_RANGE SALES_VOLUME_RANGE CONTACT_FIRSTNAME CONTACT_LASTNAME

## 1: 1 to 10 $500,000 - $1,000,000 Shaheen Abdel

## 2: 1 to 10 $100,000 - $500,000 Caryn Effron

## 3: 1 to 10 $100,000 - $500,000 Alan Goodkin

## 4: 1 to 10 $100,000 - $500,000 Harry D Abel Jr

## 5: 1 to 10 $100,000 - $500,000 Wendy Abel Hatzel

## CONTACT_TITLE

## 1: Manager

## 2: Senior Director

## 3: Managing Director

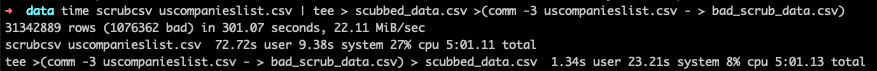

## 4: Insurance Agent

## 5: Insurance AgentAs we mentioned above, csvclean took about an hour to run, but there are probably many other ways to accomplish our goal. Although we didn’t know the exact problem when we first tried csvclean, with hindsight, a better solution would have been {scrubcsv}, because it drops the rows with a differing number of columns, and it does so very quickly. One missing feature of {scrubcsv} is the lack of an output for the bad rows, so we had to capture these in the second line using the CLI comm command. In order not to fill up my disc further, these are not run here, but the total time to run both processes was just 5 minutes, and with a little cleaning, yields the same csv’s as {csvkit}, which took an hour.

CLI to Replicate {csvclean} with {scrubcsv}

Like the bad_csvkit_data, the output of bad_scrub_data still has a few rows with the wrong number of columns, but those are easily dropped with another run of csvscrub (shown in code chunk below) to remove all of the rows which do not have the predominant 22 columns, and using {xsv}, we also drop empty columns with {xsv} select.

Load bad_scrub_data

# Filter, select and load with fread

bad_scrub_data <-

fread(cmd = glue::glue("scrubcsv {path_to_data}/bad_scrub_data.csv | xsv select 1-11,15,17-19,22"))

# Use same names

names(bad_scrub_data) <- data_namesWe can see that the output of the bad rows from the two methods are the same..

# Check if identical

identical(bad_csvkit_data, bad_scrub_data)## [1] TRUEFurther Explorations

Here we show off a few tricks, with this trick scanning to locate Connecticut businesses from the 30 million rows in less than a minute. For example, we are able to stack the two data sets, filter the State of Connecticut and calculate the number of businesses by city. We would have liked to call the output from `fread()`, but in this case, the sub-processes from stacking the two tables seem to not be able to find the file paths from within R, so that is the first example of something which doesn’t work.

time xsv cat rows <(xsv select 1,5,6 ~/Desktop/David/Projects/uscompanies/data/scubbed_data.csv) <(xsv select 1,5,6 ~/Desktop/David/Projects/uscompanies/data/bad_scrub_data.csv) | xsv search -s STATE 'CT' | xsv frequency -s CITY## CSV error: record 24 (line: 25, byte: 4189): found record with 19 fields, but the previous record has 22 fields

## field,value,count

## CITY,Stamford,22620

## CITY,Hartford,21278

## CITY,Norwalk,15085

## CITY,New Haven,14792

## CITY,Bridgeport,12111

## CITY,Danbury,10984

## CITY,Milford,10770

## CITY,Waterbury,9180

## CITY,Greenwich,8710

## CITY,Fairfield,8624

##

## real 0m35.693s

## user 0m47.741s

## sys 0m6.376sWe can count the top 10 most states occurring in the data using xsv frequency and choosing the STATE column, which takes about a minute. The count seem roughly as expected, but a business in this data set can range from a sole proprietor to a multi-national. What we are really seeing is the number of locations which are a business.

tic()

data <-

fread(cmd = glue::glue('xsv select STATE {path_to_data}/scubbed_data.csv | xsv frequency'))

toc()## 35.537 sec elapseddata## field value count

## 1: STATE CA 3605240

## 2: STATE TX 2584658

## 3: STATE FL 2468792

## 4: STATE NY 1972894

## 5: STATE PA 1227555

## 6: STATE IL 1221124

## 7: STATE MI 967717

## 8: STATE NC 945014

## 9: STATE NJ 930482

## 10: STATE VA 798290For a grand finale, we thought it might be nice to find unique rows, but interestingly, we couldn’t find this as a built in capability in either {xsv} or {csvkit}, though both have requests to add it. The traditional sort | uniq would be pretty slow for such a large data set on our small computer, so we found another Rust library {huniq}. Now in the hang of it, there are so many resources available. It looks like if looked at by zip, it took about a minute to find out that there are 26 million unique businesses in the stacked data set, less than the full listed 31 million.

time xsv cat rows <(xsv select 1,7 ~/Desktop/David/Projects/uscompanies/data/scubbed_data.csv) <(xsv select 1,7 ~/Desktop/David/Projects/uscompanies/data/bad_scrub_data.csv) | huniq | xsv count## CSV error: record 24 (line: 25, byte: 4189): found record with 19 fields, but the previous record has 22 fields

## 26431218

##

## real 0m54.845s

## user 1m16.267s

## sys 0m55.631sConclusion

R is so often knocked for being slow, but views as wrapper of other tools like the Rust libraries, it might not be so true. {xsv}, {rg} and {huniq} were not as hard for us to understand as awk and surely perform a lot better. This exercise improved our confidence with the command line, and the tricks from Data Science at the Command Line. After a while referring to the man(ual) or help pages made, along with the usual Google search and Stack Overflow, we were able to figure out most challenges. Combined with fread(), it really starts to seem like a superpower at least with large, messy data sets. We are hoping that connecting the dots here will help others to solve similar problems.